
List: CS231n (5)

개발로 하는 개발

[CS231n] Assignment 1 - Two Layer Net


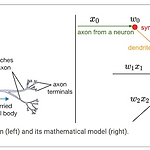

Two Layer Net: a biological neuron vs. the mathematical input and output of a neuron. An activation function (or non-linearity) takes a single number and performs a fixed mathematical operation on it. In a fully-connected layer, neurons in two adjacent layers are fully pairwise connected, but neurons within a single layer share no connections. Each layer is usually a matrix multiplication with acti..
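The forward pass described above can be sketched in a few lines of NumPy. This is only a minimal illustration, not the assignment's reference solution: the function names `relu` and `two_layer_forward`, the choice of ReLU as the activation, and the toy shapes are all assumptions made for the example.

```python
import numpy as np

def relu(x):
    # Activation function: applied element-wise, one number at a time.
    return np.maximum(0, x)

def two_layer_forward(X, W1, b1, W2, b2):
    # First fully-connected layer: matrix multiplication, then non-linearity.
    hidden = relu(X @ W1 + b1)
    # Second fully-connected layer: produces one raw score per class.
    return hidden @ W2 + b2

# Toy sizes (assumed): 4 inputs of dimension 5, 10 hidden units, 3 classes.
rng = np.random.default_rng(0)
X = rng.standard_normal((4, 5))
W1 = rng.standard_normal((5, 10)) * 0.01
b1 = np.zeros(10)
W2 = rng.standard_normal((10, 3)) * 0.01
b2 = np.zeros(3)

scores = two_layer_forward(X, W1, b1, W2, b2)
print(scores.shape)
```

Every hidden unit sees every input feature through `W1`, but no weight links two units of the same layer, which is what "fully pairwise connected between adjacent layers" means in practice.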

[CS231n] Assignment 1 - Softmax


Softmax: another popular classifier (like SVM), and a generalized version of the binary logistic regression classifier. Softmax function: $f_j(z) = e^{z_j} / \sum_k e^{z_k}$. Loss function: cross-entropy. Numerical stability: exponentials can produce very large numbers, so normalize the values before exponentiating. SVM vs. Softmax: same score function Wx + b, different loss function. Softmax calculates a probability for each class, which is easier to interpret. Softmax.p..
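The numerical-stability point is easier to see in code. The sketch below assumes the usual trick of subtracting each row's maximum score before exponentiating, which leaves the probabilities unchanged; the function names `softmax` and `cross_entropy` and the sample scores are illustrative, not taken from the assignment.

```python
import numpy as np

def softmax(scores):
    # Shift each row so its largest score is 0; exp() can no longer overflow.
    shifted = scores - np.max(scores, axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=1, keepdims=True)

def cross_entropy(scores, y):
    # Mean negative log-probability assigned to the correct class.
    probs = softmax(scores)
    n = scores.shape[0]
    return -np.mean(np.log(probs[np.arange(n), y]))

# The first row would overflow with a naive np.exp(scores).
scores = np.array([[1000.0, 1001.0, 1002.0],
                   [1.0, 2.0, 3.0]])
y = np.array([2, 2])

probs = softmax(scores)
loss = cross_entropy(scores, y)
print(probs)
print(loss)
```

Both rows yield the same probabilities, because softmax depends only on the differences between scores.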

[CS231n] Assignment 1 - SVM


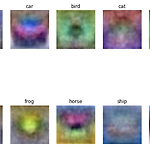

KNN is space inefficient: it has to remember all the data in the training set. Classifying is also expensive: it must calculate the distance to every example in the training set. -> Use SVM. SVM is a linear classifier built from a score function and a loss function: minimize the loss function with respect to the parameters of the score function. For CIFAR-10 we have a training set of N = 50,000 images, each with D = 32 x 32 x ..
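As a rough sketch of "score function plus loss function", here is a vectorized multiclass hinge (SVM) loss. Several things are assumptions for illustration: the name `svm_loss`, the margin `delta=1.0`, the row-per-example layout `X @ W + b`, and the omission of a regularization term.

```python
import numpy as np

def svm_loss(X, y, W, b, delta=1.0):
    # Score function: one linear score per class for every example.
    scores = X @ W + b
    n = X.shape[0]
    # Score of the correct class, as a column so it broadcasts across classes.
    correct = scores[np.arange(n), y][:, None]
    # Hinge: penalize any wrong class that comes within `delta` of the correct one.
    margins = np.maximum(0, scores - correct + delta)
    margins[np.arange(n), y] = 0  # the correct class contributes no loss
    return margins.sum() / n

# Toy data (assumed): 2 examples, 2 features, 2 classes.
X = np.eye(2)
W = np.array([[3.0, 1.0],
              [0.5, 2.0]])
b = np.zeros(2)

print(svm_loss(X, np.array([0, 1]), W, b))  # labels that agree with the scores
print(svm_loss(X, np.array([1, 0]), W, b))  # labels that disagree with the scores
```

Unlike KNN, only `W` and `b` need to be kept after training, and classifying a new example costs one matrix multiplication.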

[CS231n] Assignment 1 - KNN


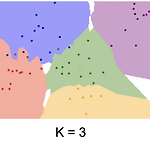

KNN (k-nearest neighbors). Hyperparameters: k, and L1 or L2 (the distance formula). Basically, you are trying to figure out which region each dot belongs to, and you determine this by calculating the distance between the test point and the training points. You take the k nearest points and pick whichever label is in the majority. There are two ways to calculate the distance: L1 and L2. In the assig..
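The procedure above can be sketched as follows. This is an illustrative version rather than the assignment's code: the name `knn_predict`, the `metric` argument, and the toy data are assumptions, and labels are assumed to be non-negative integers so that `np.bincount` can count the votes.

```python
import numpy as np

def knn_predict(X_train, y_train, X_test, k=1, metric="L2"):
    # Pairwise differences, shape (num_test, num_train, num_features).
    diffs = X_test[:, None, :] - X_train[None, :, :]
    if metric == "L1":
        dists = np.abs(diffs).sum(axis=2)          # sum of absolute differences
    else:
        dists = np.sqrt((diffs ** 2).sum(axis=2))  # Euclidean distance
    # Indices of the k closest training points for each test point.
    nearest = np.argsort(dists, axis=1)[:, :k]
    votes = y_train[nearest]
    # Majority vote among the k neighbors.
    return np.array([np.bincount(row).argmax() for row in votes])

X_train = np.array([[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [5.0, 6.0]])
y_train = np.array([0, 0, 1, 1])
X_test = np.array([[0.2, 0.1], [5.1, 5.2]])

print(knn_predict(X_train, y_train, X_test, k=3, metric="L1"))
print(knn_predict(X_train, y_train, X_test, k=3, metric="L2"))
```

Note that the whole training set is kept in memory and compared against every test point, which is the cost the SVM post refers to.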

[CS231n] Assignment Python Summary


1. Operations between arrays

```python
import numpy as np

# create two arrays
arr1 = np.array([1, 2, 3])
arr2 = np.array([4, 5, 6])

# add the two arrays together (element-wise)
result = arr1 + arr2
print(result)
```

2. 2D array operations

```python
import numpy as np

# create two 2D arrays
arr1 = np.array([[1, 2], [3, 4]])
arr2 = np.array([[5, 6], [7, 8]])

# add the two arrays together (element-wise)
result = arr1 + arr2
print(result)
```

3. pow (exponentiation): pow(base ..